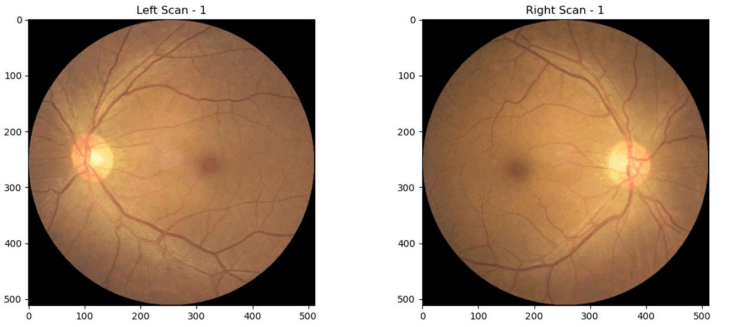

Sample Left and Right Fundus Scans

A Bit of Background

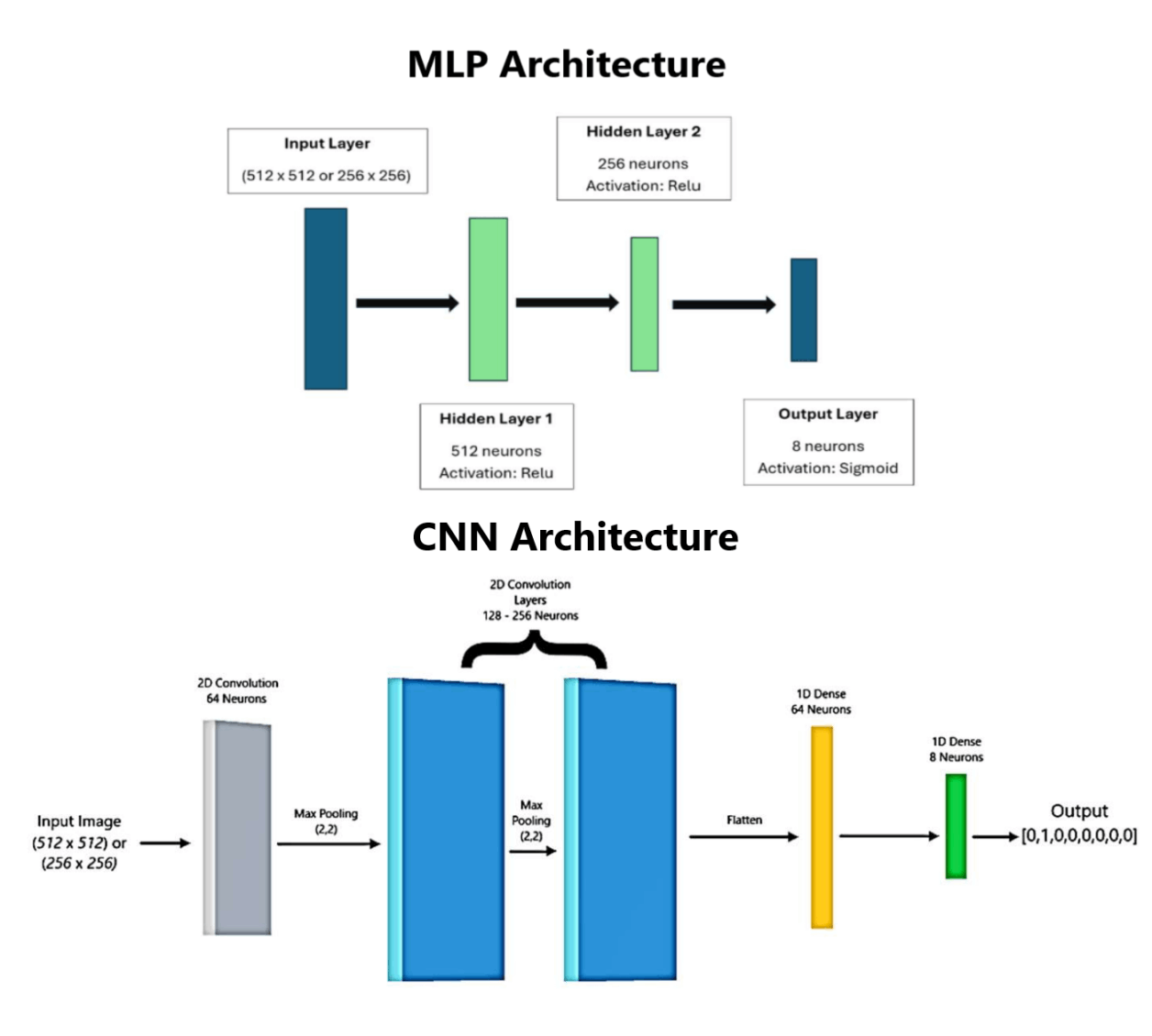

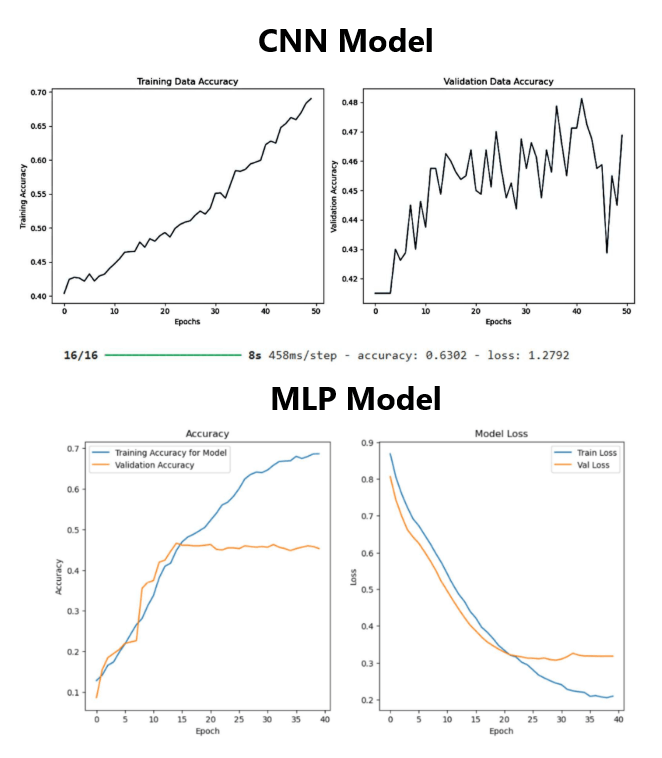

During my time at the University of Waterloo, I wrote a paper studying the application of Convolutional Neural Networks (CNNs) and Multilayer Perceptrons (MLPs) to classifying ocular diseases from patients' retinal fundus scans. The full paper can be found here: Research Paper.

Skills: Python, Convolutional Neural Networks, Image Processing, Data Cleansing, Model Training, Model Tuning, Adam

Several models were trained on a dataset of 10,000 images, varying the following parameters:

- Number of Images

- Image Resolution

- Number of Epochs

- Learning Rate

- Number of Hidden Layers

- Neuron Density (the number of neurons in a layer)

- Dropout

- Batch Size
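
To make the parameter list above concrete, here is a minimal sketch of how such a sweep could be organized in Python. It is not the code from the paper: the `TrainingConfig` class, the `build_grid` helper, and every value in `SEARCH_SPACE` are hypothetical placeholders, and the paper may well have varied one parameter at a time instead of a full grid.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class TrainingConfig:
    """One combination of the parameters listed above (hypothetical names)."""
    num_images: int
    image_resolution: int   # side length in pixels, assuming square inputs
    num_epochs: int
    learning_rate: float
    num_hidden_layers: int
    neurons_per_layer: int  # "neuron density"
    dropout: float
    batch_size: int


# Placeholder values for illustration only; these are NOT the values from the paper.
SEARCH_SPACE = {
    "num_images": [1_000, 10_000],
    "image_resolution": [64, 128],
    "num_epochs": [10],
    "learning_rate": [1e-3, 1e-4],
    "num_hidden_layers": [1, 2],
    "neurons_per_layer": [64],
    "dropout": [0.0, 0.5],
    "batch_size": [32],
}


def build_grid(space):
    """Return one TrainingConfig per combination of values in the search space."""
    keys = list(space)
    combos = product(*(space[key] for key in keys))
    return [TrainingConfig(**dict(zip(keys, values))) for values in combos]


configs = build_grid(SEARCH_SPACE)
print(f"{len(configs)} configurations to train")
```

Each `TrainingConfig` would then be handed to whatever function builds and trains a model. A full grid grows multiplicatively with every added value, so in practice it is common to hold most parameters fixed and vary only one or two at a time.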