Overview

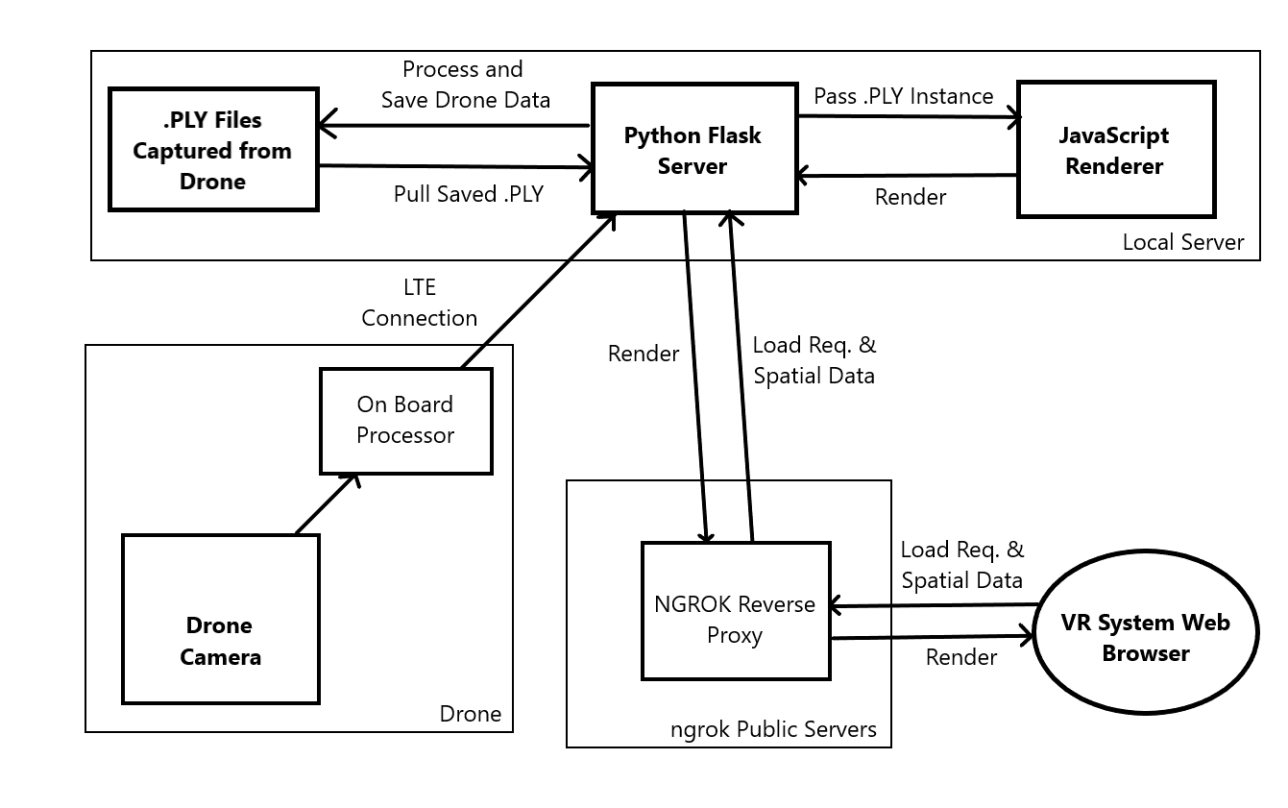

As part of a drone surveying project called SkyMap, I developed a web-based point cloud and mesh rendering application that could be viewed in VR in near real time. The application supported not only viewing points but also taking virtual measurements, which could be scaled to reflect real-world distances.
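As a rough illustration of how scaled measurements like these can work (a minimal sketch, not the SkyMap code itself), one common approach is to calibrate against a reference segment whose real length is known, then multiply any distance measured in model units by the resulting factor. The function names `scale_factor` and `real_distance`, and the use of metres, are assumptions made for this example.

```python
import math


def scale_factor(known_real_m, ref_a, ref_b):
    """Return metres per model unit, using a reference segment of known real length.

    ref_a and ref_b are (x, y, z) points in model coordinates (hypothetical inputs).
    """
    model_len = math.dist(ref_a, ref_b)
    if model_len == 0:
        raise ValueError("reference points must be distinct")
    return known_real_m / model_len


def real_distance(p, q, metres_per_unit):
    """Convert the model-space distance between p and q into metres."""
    return math.dist(p, q) * metres_per_unit


# Calibrate: a segment 2 model units long is known to be 10 m in reality.
factor = scale_factor(10.0, (0, 0, 0), (2, 0, 0))

# Measure: two picked points that are 5 model units apart.
print(real_distance((0, 0, 0), (3, 4, 0), factor))  # 25.0
```

A single uniform factor assumes the reconstruction is not distorted differently along each axis; a non-uniform model would need a per-axis or full transform instead.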

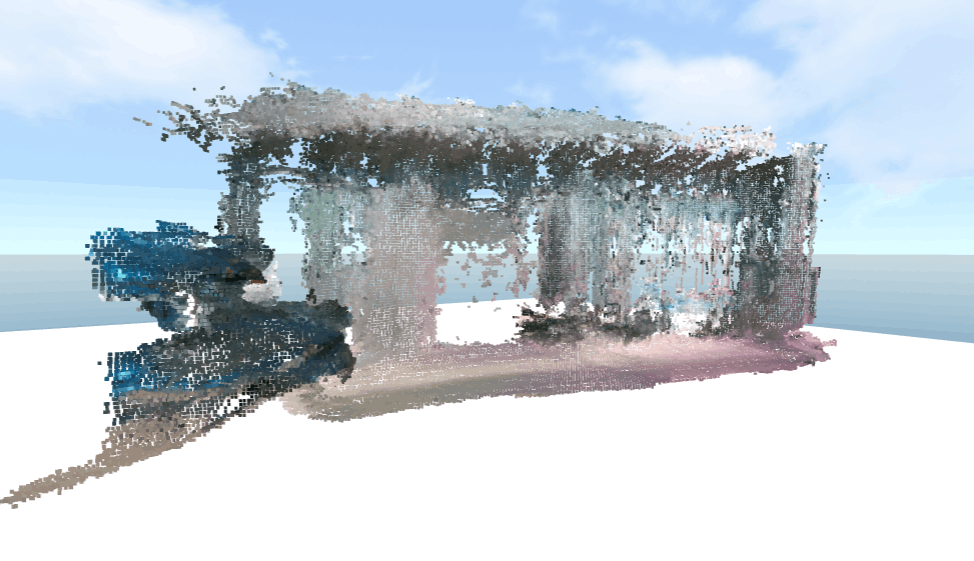

The reconstruction shown uses data captured during a flight test outside the University of Waterloo.

Skills: JavaScript, Python, HTML, Flask (Web Server), Point Clouds/Mesh Rendering, VR, Analog Controls, Dynamic File Loading